import matplotlib.pyplot as pltLSTM Language Model from scratch

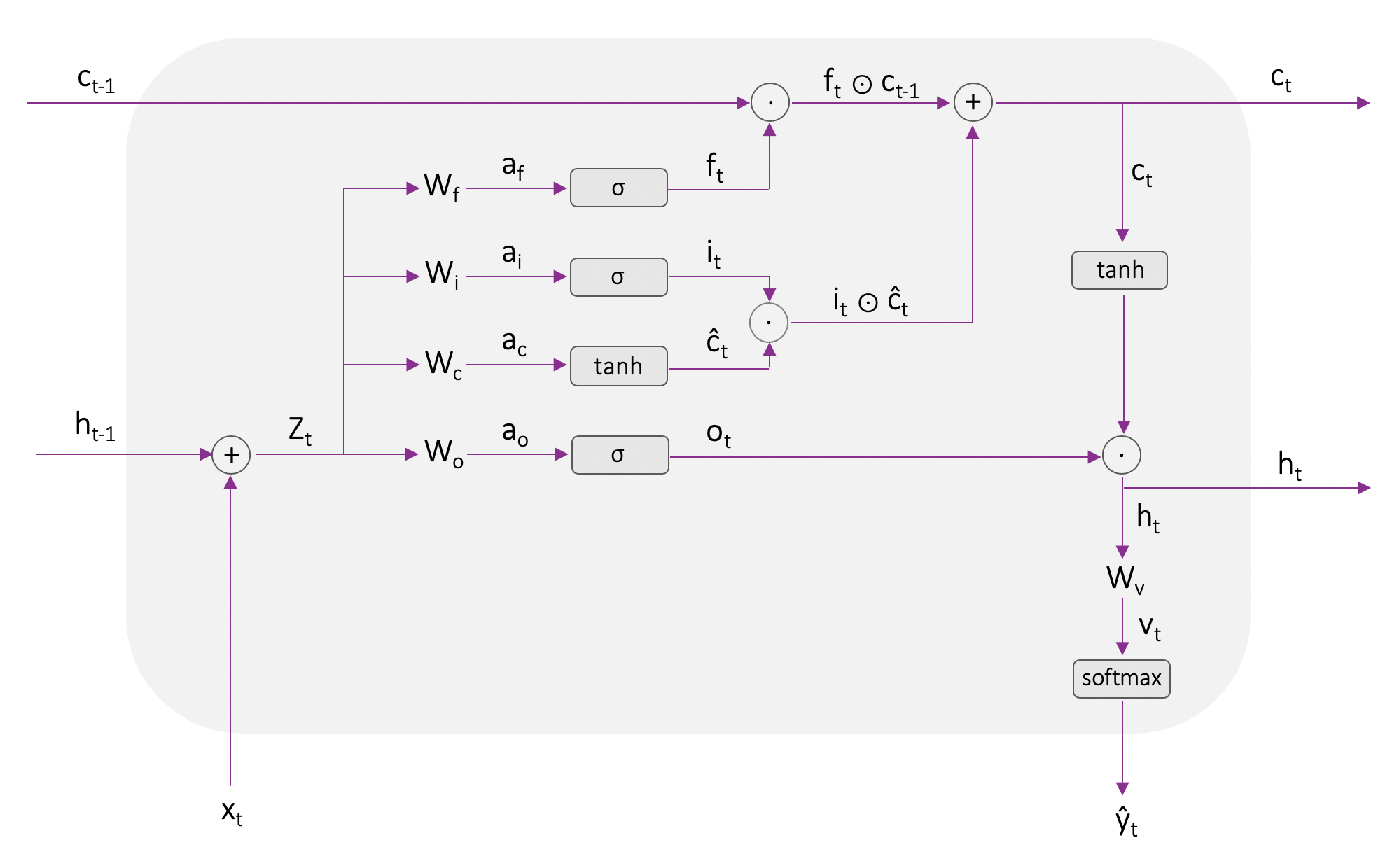

This notebook was borrowed from Christina Kouridis’ github. The notation is different than the notation used in the LTSM section of the notes and will be changed in a next version of this page.

1. Imports

2. Data Preparation

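```python
# read the corpus and lowercase it
with open('HP1.txt') as f:
    data = f.read().lower()

# sorted() gives the same character order on every run (set order can vary between sessions)
chars = sorted(set(data))
vocab_size = len(chars)

print('data has %d characters, %d unique' % (len(data), vocab_size))
```

data has 442743 characters, 54 unique

```python
# creating dictionaries for mapping chars to ints and vice versa
char_to_idx = {ch: i for i, ch in enumerate(chars)}
idx_to_char = {i: ch for i, ch in enumerate(chars)}
```

3. Load and run model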

```python
# model.py defines the LSTM class used below
%run model.py
model = LSTM(char_to_idx, idx_to_char, vocab_size, epochs=10, lr=0.0005)
```
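`model.py` is not reproduced on this page. The gradient check in the training output lists ten parameters (`Wf`, `bf`, `Wi`, `bi`, `Wc`, `bc`, `Wo`, `bo`, `Wv`, `bv`), which matches the usual LSTM layout: forget, input and output gates, a candidate cell state, and a projection `Wv`, `bv` from the hidden state to next-character scores. The sketch below shows one forward step and a sampling loop under stated assumptions: every gate reads the concatenation `[h_prev; x]` of the previous hidden state and a one-hot input, and the helper names, hidden size and initialization scale are hypothetical, not taken from `model.py`. Drawing each character from the softmax and feeding it back in is plausibly how the text samples in the log are produced, but that is also an assumption.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def softmax(logits):
    e = np.exp(logits - np.max(logits))  # subtract the max for numerical stability
    return e / np.sum(e)

def init_params(vocab_size, hidden_size, seed=0):
    """Hypothetical initialization; gate weights act on z = [h_prev; x]."""
    rng = np.random.default_rng(seed)
    z_size = hidden_size + vocab_size
    p = {}
    for gate in ("f", "i", "c", "o"):
        p["W" + gate] = rng.normal(0, 0.1, (hidden_size, z_size))
        p["b" + gate] = np.zeros((hidden_size, 1))
    p["Wv"] = rng.normal(0, 0.1, (vocab_size, hidden_size))
    p["bv"] = np.zeros((vocab_size, 1))
    return p

def lstm_step(p, x, h_prev, c_prev):
    """One time step; x is a one-hot column vector of shape (vocab_size, 1)."""
    z = np.vstack((h_prev, x))
    f = sigmoid(p["Wf"] @ z + p["bf"])      # forget gate
    i = sigmoid(p["Wi"] @ z + p["bi"])      # input gate
    c_bar = np.tanh(p["Wc"] @ z + p["bc"])  # candidate cell state
    o = sigmoid(p["Wo"] @ z + p["bo"])      # output gate
    c = f * c_prev + i * c_bar              # new cell state
    h = o * np.tanh(c)                      # new hidden state
    y = softmax(p["Wv"] @ h + p["bv"])      # distribution over the next character
    return y, h, c

def sample(p, seed_idx, n, vocab_size, hidden_size, rng):
    """Generate n character indices, feeding each sampled character back as input."""
    h = np.zeros((hidden_size, 1))
    c = np.zeros((hidden_size, 1))
    x = np.zeros((vocab_size, 1))
    x[seed_idx] = 1.0
    out = []
    for _ in range(n):
        y, h, c = lstm_step(p, x, h, c)
        idx = int(rng.choice(vocab_size, p=y.ravel()))
        x = np.zeros((vocab_size, 1))
        x[idx] = 1.0
        out.append(idx)
    return out

# usage with the sizes from this notebook (hidden size 100 is an assumption)
params_demo = init_params(vocab_size=54, hidden_size=100)
idxs = sample(params_demo, seed_idx=0, n=200, vocab_size=54, hidden_size=100,
              rng=np.random.default_rng(1))
# ''.join(idx_to_char[i] for i in idxs) would turn the indices into text
```

With untrained weights the output is close to uniform noise, similar to the first sample in the log.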

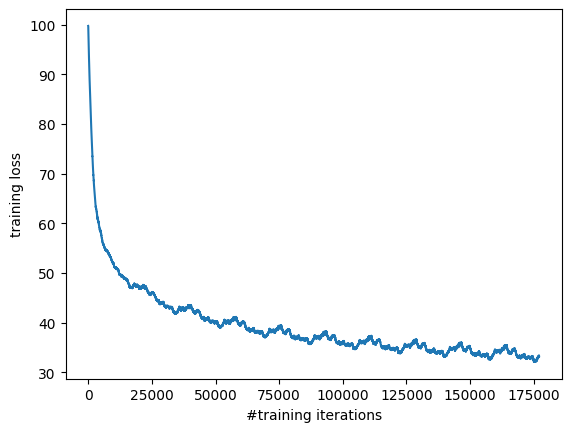
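The training log prints window boundaries such as `Batch: 0 - 25` and `Batch: 400000 - 400025`, which suggests that `model.train` walks through `data` in consecutive 25-character windows, predicting at each position the character that follows. The sketch below shows that kind of input/target construction; `make_windows` and `one_hot` are hypothetical helpers, and the non-overlapping step of 25 is an assumption consistent with the logged offsets (400000 is a multiple of 25), not a reading of `model.py`.

```python
import numpy as np

def make_windows(data, char_to_idx, seq_len=25):
    """Yield (start, inputs, targets); targets are the inputs shifted one character ahead."""
    for start in range(0, len(data) - seq_len, seq_len):
        chunk = data[start:start + seq_len + 1]  # seq_len + 1 characters
        idxs = [char_to_idx[ch] for ch in chunk]
        yield start, idxs[:-1], idxs[1:]

def one_hot(idx, vocab_size):
    """Column vector with a single 1 at position idx."""
    v = np.zeros((vocab_size, 1))
    v[idx] = 1.0
    return v

# usage on a toy string
text = "abcabcabcabc"
c2i = {ch: i for i, ch in enumerate(sorted(set(text)))}
for start, inputs, targets in make_windows(text, c2i, seq_len=5):
    print(start, inputs, targets)
```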

```python
J, params = model.train(data)
```

**********************************

Gradient check...

--------- Wf ---------

Approximate: -1.740830e-05, Exact: -1.737468e-05 => Error: 9.663498e-04

--------- bf ---------

Approximate: -1.745160e-02, Exact: -1.745160e-02 => Error: 8.959478e-08

--------- Wi ---------

Approximate: -2.405187e-04, Exact: -2.405360e-04 => Error: 3.594129e-05

--------- bi ---------

Approximate: -8.715965e-03, Exact: -8.716004e-03 => Error: 2.221818e-06

--------- Wc ---------

Approximate: -3.100993e-03, Exact: -3.100992e-03 => Error: 2.596673e-07

--------- bc ---------

Approximate: 4.223633e-01, Exact: 4.223632e-01 => Error: 7.624846e-08

--------- Wo ---------

Approximate: 2.267981e-04, Exact: 2.267891e-04 => Error: 1.982771e-05

--------- bo ---------

Approximate: -7.785133e-04, Exact: -7.784895e-04 => Error: 1.523026e-05

--------- Wv ---------

Approximate: -9.925721e-03, Exact: -9.925717e-03 => Error: 1.702052e-07

--------- bv ---------

Approximate: -4.163758e-02, Exact: -4.163762e-02 => Error: 3.824062e-07

Test successful!

**********************************
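Each line of the gradient check compares a numerical estimate (Approximate) of one partial derivative with the value computed by backpropagation (Exact). The printed Error values are consistent with the relative error |approx - exact| / (|approx| + |exact|); for `Wf`, 3.36e-08 / 3.48e-05 ≈ 9.7e-04. The sketch below shows the same kind of check on a toy loss `sum(tanh(W x))`, assuming a centered difference with `eps = 1e-5`; the toy loss, the step size and the helper names are illustrative choices, not what `model.py` necessarily uses.

```python
import numpy as np

def numerical_grad(f, W, idx, eps=1e-5):
    """Centered difference (f(W + eps) - f(W - eps)) / (2 eps) for the single entry W[idx]."""
    old = W[idx]
    W[idx] = old + eps
    f_plus = f(W)
    W[idx] = old - eps
    f_minus = f(W)
    W[idx] = old  # restore the original value
    return (f_plus - f_minus) / (2 * eps)

def relative_error(approx, exact):
    return abs(approx - exact) / (abs(approx) + abs(exact))

rng = np.random.default_rng(0)
W = rng.normal(size=(4, 3))
x = rng.normal(size=(3, 1))

def loss(W):
    return float(np.sum(np.tanh(W @ x)))

# analytic gradient of sum(tanh(W x)) with respect to W is (1 - tanh(W x)^2) x^T
exact_grad = (1 - np.tanh(W @ x) ** 2) @ x.T

idx = (2, 1)
approx = numerical_grad(loss, W, idx)
err = relative_error(approx, exact_grad[idx])
print(f"Approximate: {approx:e}, Exact: {exact_grad[idx]:e} => Error: {err:e}")
```

Checking a single entry per parameter matrix, as the log appears to do, keeps the check cheap: every numerical estimate costs two full forward passes.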

Epoch: 0 Batch: 0 - 25 Loss: 99.72

".k82)~62.glxh–z-7cny,e

-9d3)cfv0yw,p9k“ro";\g0'j3bmv1yvc7i–;z~m\)q:q31x

az~m3gk-lr)a,

ue\1\5rvon

7e\n–

h2s–3g"4sc1s284h:u"el8?dp?m?s

!u(\7d(tj:1?v)jxq–54j\x-m"4pigwr;m8wdbddbs3f~ 11*.*yf(v\4 pj(g6a"o38d3f\r~;5eeuof\h7oy6-h:)5ux*"7ddt?r

0lei6w:

t5\qo

Epoch: 0 Batch: 400000 - 400025 Loss: 47.77

meonetcellid andtetted ho moven't cablerone qyout here to you chas cell orez.

"then, whem thee blake himpeated. higred the beat tutw. thefriapling. ere the sould the wathid?" queingoder, whithes. itt at ad they.

the dooine, whimred of atee treme

Epoch: 1 Batch: 0 - 25 Loss: 47.38

ed ago has terem-the cellor, your he say's bonething to nees beadin: noth tuck, looleroug soulls.

"nooke madre.

"lo.."

"eatery calld in?" stime, or harry expley hivery on thire, wemt hat bezom of tom on won't ramesle. the abgwobet sill of to wr

Epoch: 1 Batch: 400000 - 400025 Loss: 42.27

en againicl, it. by kneanhed widded the mught harry sall, be blowrcunals in lofk. he say as fillo the now" said ron, beca thimotthing was thead just pilesper lown then crowped on any. it's tert his beeved all?"

harrees jumade that the foolm, herny

Epoch: 2 Batch: 0 - 25 Loss: 42.49

. it' harry to, e ally threet as in alming putter ty pire withong lears?" say bref a a ron for undout be or in at from," said to ingo."

"wher. the to there in whit in and a soraly beats if i surm peces goot ron, highed coursy botting.." sarly was i

Epoch: 2 Batch: 400000 - 400025 Loss: 39.39

wers ope chossellow lamged to drow inte tright. ran which potteas anory araider to think it i drinpsthing he the cowply plant him it was evinggotcent on upsied somethere, dustreyt, hagrid, bat'le sard right down bying up a fren of them.

"he'll have

Epoch: 3 Batch: 0 - 25 Loss: 39.83

or and the lies, the somerme?"

"got was surd of so fry quirrileh out," roim.

rdinatt or up obalit and you harry have be your loild exore uncy seady, mording ee..."

"he was dumgonly. you're was i peblay, slatcel, you folt an amound up a planes a

Epoch: 3 Batch: 400000 - 400025 Loss: 37.39

ak now. there, who happeed the cloak as ficted. flunt.

harry all of the his easing ontroost.

rod with side pussed. "aff niw yo- was he'd bony head of well, condedering sippedore ilmoarted um all goon iato to kissing to came... wen other allving b

Epoch: 4 Batch: 0 - 25 Loss: 37.95

ing on the saune him, think aron' ie."

"but ofything? you to which was a ron she's stor, pot ron, male, at ohan -- they way bas anyther?"

"loarpay a kieding now, have there?"

"forder were se migto i -- you," said he more,"

"what you the knook

Epoch: 4 Batch: 400000 - 400025 Loss: 35.98

ow as the foof somathing alm the blanst the thon in enew end getter his anyenly there two hed. they eppially. he stoved dene.

"so finsmere at the toom, ip you saupt extilie, he mouffly," said herm out.

"it's abon' bations tome," said haspered wan

Epoch: 5 Batch: 0 - 25 Loss: 36.61

er! "me they yeh the tow enzy -- now.

he was hume a but looked but that it said smilebout, but mumby in, he had he vellat laot behand to suf mararon, voldy trything nighirgand a, hairfung whistine, wrion it was ever at at leaws tome any standed to

Epoch: 5 Batch: 400000 - 400025 Loss: 34.93

isted, lit of urbanas,. there? all right it, harry loop let and suddenly, it wall, tham.

"linting as shather!" said harry, atting it regenard were placible.

"i plintion, you lecked his," said harry, a day...... it was snapes are baraking the fort

Epoch: 6 Batch: 0 - 25 Loss: 35.63

ing expseptaiting around aprife, they sittar.

"she unlergoim to, while exat you, but -- i got nearly breat, litter, any you.. professor school bo told sfeem up anythin me tome his hinder, and harry scensce, if a start out hermiound wathen oncober a

Epoch: 6 Batch: 400000 - 400025 Loss: 34.1

sserefup, at horward most. it's noo caber at it was look, but ron, rone ry foold had to daying at the worrs, the cloak his tallow who elppy let the hoot wite as the high? jumped, we've noinded anyous the bort stone, up to. make i the good. "you're de

Epoch: 7 Batch: 0 - 25 Loss: 34.86

, and enowew with most sa. master ap over them i soundened well chated.

i that ration and so looked of him. past the other im.

"course," said harry.

"air yould be now ron nervous -- key leaves and sitian was and aly, professor drying, but gont

Epoch: 7 Batch: 400000 - 400025 Loss: 33.42

amber corning to we all.

he had if an achosteming, now.

it shopled on this lioker. "we goozan colled -o heard again. professor week again it way expicing of my?"

"harry arentay catched and thel," said hermione a foot stop been looked at them bo

Epoch: 8 Batch: 0 - 25 Loss: 34.23

oon of harry, and the room to harry.

"oh, neville, you th told of that will your minub.".

"? scerise dringing to siffusied ofgeroush the tome -- it sudder, she strettering let we have fouring as ohe off hoge wanted yes, i stand, on the lets...."

Epoch: 8 Batch: 400000 - 400025 Loss: 32.85

speat, usianding her free. then saves. he's good of them.

"professor mcgonagall's few do make.."

"-- bounhing norbert for them. ron, he was, they was priving, thon, i don't dogf -- you lound you pook again, and i'r event sony with as there, leeve

Epoch: 9 Batch: 0 - 25 Loss: 33.71

ed at little as dien't beard whispering a mean and harry, "peanber whurbus al op as," said dumbiertur. fasted and it was insibe.

"are my crearly look at them on the ron of cupposs you nomes, the glusmy. they were a mistrof of gay expested thought s

Epoch: 9 Batch: 400000 - 400025 Loss: 32.37

asenfor and hermione into the trallow you a like and pastnice.

"she was a fell pastuch rided."

"acrows could tell, is, so you wold a you thinks and you keep my shop uch claght and dumbledley, id forget it crosmons when your trying if he stwas?" h
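Assuming the logged loss is the cross-entropy summed (not averaged) over one 25-character window, the first value has a simple interpretation: an untrained model that assigns probability 1/54 to each of the 54 characters scores 25 * ln(54) ≈ 99.72, exactly the loss reported at epoch 0, batch 0. Dividing later values by 25 gives a per-character loss in nats, and exponentiating that gives a per-character perplexity. The short calculation below assumes only the window length and vocabulary size reported in this notebook.

```python
import math

seq_len = 25     # characters per window, as in "Batch: 0 - 25"
vocab_size = 54  # unique characters reported in the data-preparation step

# cross-entropy of a uniform prediction, summed over one window
uniform_loss = seq_len * math.log(vocab_size)
print(f"uniform baseline: {uniform_loss:.2f}")  # prints uniform baseline: 99.72

# losses copied from the training log above
for logged in (99.72, 47.38, 32.37):
    per_char = logged / seq_len
    print(f"loss {logged:6.2f} -> {per_char:.3f} nats/char, perplexity {math.exp(per_char):.2f}")
```

A perplexity near 54 means the model is guessing uniformly; the drop over ten epochs reflects how much more sharply it predicts the next character by the end of training.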